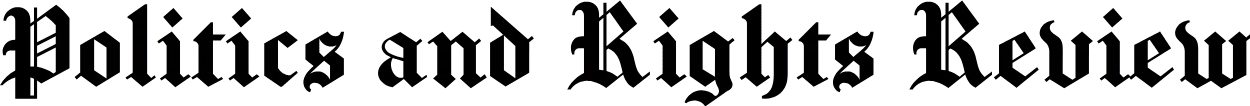

The digital revolution, while bringing about unprecedented connectivity and information dissemination, has also ushered in an era in which the authenticity of information is constantly under siege. Central to this problem are AI bots, which have become effective tools for those seeking to manipulate public sentiment. These bots can mimic human interactions closely enough that they are often hard to tell apart from real users on social media platforms and discussion forums.

Disinformation, or the deliberate spread of false information, isn’t a novel concept. However, the efficiency and scale at which it can now be propagated are unparalleled. Political AI bots amplify this disinformation, targeting specific demographics, tailoring messages to exploit biases, and creating echo chambers that reinforce false beliefs. Over time, these manipulated narratives can skew public perception, influencing political decisions and election outcomes.

The danger lies not just in the spread of falsehoods, but in the erosion of public trust. As people grapple with discerning fact from fiction, faith in democratic institutions and processes can wane. The very pillars of democracy—open dialogue, informed decision-making, and the pursuit of truth—are at risk.

Addressing this challenge is paramount. It requires a collaborative effort between tech companies, regulatory bodies, and the public. Enhanced detection mechanisms, digital literacy campaigns, and stringent regulations are vital steps towards safeguarding the integrity of our democracies in the face of these silent manipulators.

The Evolution of Disinformation

Disinformation, at its core, is the intentional spread of misleading or false information. Its roots trace back to ancient civilizations, where rulers and generals would use deceptive tactics to gain advantages in politics and warfare. For instance, during wartime, false information about troop movements or strategies would be disseminated to confuse and mislead the enemy. In the realm of politics, rumors and false narratives were spread to discredit opponents or to rally support.

However, the means and methods of spreading disinformation have evolved dramatically over the centuries. The advent of print media in the 15th century, and later the proliferation of radio and television in the 20th century, provided powerful platforms for propaganda. Governments, corporations, and other entities could broadcast their narratives to large audiences, shaping public perception on a grand scale.

With the arrival of the digital age, the landscape of disinformation underwent another seismic shift. The internet, with its decentralized nature, provided a platform where anyone could become a broadcaster. Social media platforms further accelerated this trend, allowing for the rapid and widespread dissemination of information, both true and false. The barriers to entry fell, and creating and spreading disinformation suddenly required little more than an internet connection.

AI bots have added another layer of complexity to this scenario. These bots can generate, share, and amplify disinformation at an unprecedented rate. They can tailor messages to specific audiences, exploit existing biases, and create echo chambers where false narratives are continuously reinforced. The anonymity of the digital realm also makes it challenging to trace the origins of these campaigns, allowing malicious actors to operate with relative impunity.

AI Bots: The New Foot Soldiers

In the early days of the internet, bots were rudimentary programs, designed to automate mundane tasks. From web scraping to automated responses, their functions were limited and easily distinguishable. However, as technology advanced, so did the capabilities of these bots. The infusion of artificial intelligence transformed them from mere scripts into sophisticated entities capable of complex interactions.

Today’s AI bots are a far cry from their predecessors. With machine learning at their core, they can analyze vast amounts of data, learn from interactions, and adapt their responses accordingly. This learning capability enables them to engage in meaningful conversations, often indistinguishable from human interactions. On social media platforms, they can share, comment on, and amplify content, influencing the flow of information and shaping online narratives.

But it’s their ability to mimic human behavior that truly sets them apart. By analyzing online trends, they can craft messages that resonate with specific audiences, participate in trending discussions, and even initiate conversations that steer public opinion. This camouflage allows them to operate covertly, making it challenging to distinguish between genuine human interactions and bot-driven engagements.

The implications of this are profound. In the realm of politics, for instance, AI bots can be deployed to spread propaganda, discredit opponents, or amplify specific narratives. They can sway public opinion, influence election outcomes, and deepen societal divides. Moreover, because they operate in the shadows, their true motives and the entities behind them often remain concealed, adding a layer of complexity to the challenge of combating disinformation.

AI Bots: The Impact on Democracy

Democracy, in its essence, thrives on informed choice and the free exchange of ideas. It relies on the collective wisdom of its citizens, who, armed with accurate information, make decisions that shape the future of their societies. However, the digital age, with its blend of unchecked disinformation and sophisticated AI bots, poses a significant threat to this foundational principle.

Elections, the very heartbeat of democratic processes, are increasingly under siege. In a world where information travels at the speed of light, misleading narratives, often amplified by AI bots, can rapidly permeate the public consciousness. Undecided voters, seeking clarity, can easily fall prey to these falsehoods, leading them to make decisions based on distorted realities. The ripple effect of this can be catastrophic. Election outcomes, instead of reflecting the genuine will of the people, may become a manifestation of manipulated narratives.

But the impact of disinformation extends beyond the ballot box. Societal divides, often latent, can be exacerbated by false narratives that play on existing biases and fears. Over time, these divides can morph into chasms, threatening social cohesion and undermining the very fabric of democratic societies.

Furthermore, the pervasive nature of disinformation erodes public trust, not just in electoral processes, but in institutions at large. When citizens begin to question the credibility of scientific research, judicial decisions, or even the daily news, the pillars of democracy start to waver. The concept of objective truth, once held sacrosanct, becomes mired in doubt and skepticism.

A Call to Action

In the face of the digital age’s challenges, particularly the pervasive threat of disinformation, passive observation is no longer an option. The tenets of democracy, along with truth and public trust, are at stake. As such, a concerted, multi-pronged response is imperative to safeguard the integrity of our societies.

At the forefront of this battle are tech companies, the architects of the platforms where much of this disinformation is propagated. Their responsibility is twofold. Firstly, they must continually refine and enhance their detection algorithms, ensuring that AI bots and misleading narratives are swiftly identified and curtailed. Secondly, there’s an urgent need for greater transparency. The inner workings of these algorithms, often shrouded in mystery, must be opened up so that users can understand how information is filtered and presented to them.

Parallel to technological solutions, governmental intervention is crucial. While the sanctity of free speech remains paramount, there’s a pressing need for regulations that distinguish genuine expression from malicious disinformation campaigns. Striking this balance is delicate but essential to ensure that democratic processes aren’t compromised under the guise of free expression.

However, the most potent defense against disinformation lies with the public. An informed and discerning citizenry is democracy’s strongest bulwark. Education initiatives, focusing on digital literacy and critical thinking, must be prioritized. By equipping individuals with the skills to question, analyze, and discern truth from falsehood, societies can foster a populace resilient to the allure of misleading narratives.

In Conclusion

The digital age, with its myriad advancements, has also ushered in complex challenges, chief among them the specter of disinformation amplified by AI bots. This isn’t merely a battle of algorithms or technological prowess; it’s a profound test of our society’s ability to withstand and counter threats to its foundational principles.

Our democracies, built on the bedrock of informed choice and trust, are at a pivotal juncture. The choices we make now, both individually and collectively, will shape the trajectory of our democratic institutions for generations to come. It’s not just about countering false narratives or detecting sophisticated AI bots; it’s about reaffirming the value we place on transparency and open dialogue.

As we navigate this digital landscape, our collective vigilance becomes our greatest asset. Every individual, equipped with knowledge and discernment, becomes a sentinel guarding against the tide of falsehoods. Our shared responsibility is to ensure that the digital tools at our disposal serve to enlighten, not deceive.

In the face of this challenge, let us remember that the strength of our democracies lies not in the technology we wield, but in our unwavering commitment to truth and the collective will to safeguard it.