The Double-Edged Sword of AI’s Impact

AI’s impact on freedom of expression is an increasingly important subject, and it involves a complex mix of benefits and challenges. On one hand, AI technologies have transformed the way we interact with digital platforms, above all through content personalization, which makes our online experiences more engaging and relevant. Algorithms sift through vast amounts of data to curate content that matches our interests, preferences, and past behavior. As a result, it is easier than ever to find information that resonates with us, whether news, opinions, or entertainment.

However, these benefits come with drawbacks. The same algorithms that personalize our digital experiences also raise serious ethical and legal questions. They can inadvertently create ‘filter bubbles’ and ‘echo chambers’ that isolate us from differing viewpoints and reinforce our existing beliefs. This has profound implications for democratic discourse, potentially polarizing societies and limiting healthy debate. Moreover, AI’s role in content moderation opens another Pandora’s box, including unjust censorship and the suppression of legitimate speech.

This article examines the relationship between AI and freedom of expression, covering both its empowering capabilities and its ethical pitfalls.

The Paradox of Content Personalization

AI-driven content personalization has fundamentally transformed our digital lives. By analyzing our online behavior, preferences, and interactions, algorithms curate a personalized feed whose accuracy can seem uncanny. The benefits are immediate: users become more engaged and spend more time on platforms that seem to ‘know’ them, and each interaction feels tailored to their individual needs.


Yet the same algorithms that make our online lives more convenient can also create ‘filter bubbles’ and ‘echo chambers.’ Both terms describe situations in which a recommendation system, by repeatedly showing us content similar to what we have already engaged with, encloses us in a comfortable but narrow sphere of information.

This narrowing reduces our exposure to diverse viewpoints and information and can skew our perception of reality. In this self-affirming loop, our existing beliefs and opinions are not merely maintained but continually reinforced.

The paradox lies in the tension between the user-centric benefits of personalization and the societal costs of reduced informational diversity. While we enjoy an online experience that feels intimately our own, we may be sacrificing the richness of a more varied and comprehensive understanding of the world around us.

The Ethical Quagmire of Content Moderation

AI’s role in content moderation is both indispensable and problematic. On one hand, AI systems are efficient at scanning vast amounts of data to flag and remove content that violates platform rules or legal norms. This is crucial for maintaining a safe and respectful online environment, especially given the sheer volume of content uploaded every minute. Human moderators alone would be overwhelmed by the task, not to mention the psychological toll of reviewing disturbing content.


However, AI’s limitations in this context are glaring. AI systems often struggle to understand the context in which words or images are used: they find it difficult to distinguish satire from genuine hate speech, or to account for cultural nuances that can change the meaning of a phrase or symbol. This creates a high risk of both ‘false positives’ and ‘false negatives.’ A false positive occurs when legitimate content is wrongly flagged and removed. This amounts to unjust censorship that stifles free expression and can distort public discourse. A false negative occurs when harmful or illegal content goes undetected and stays online, perpetuating hate, misinformation, or even criminal activity.

The ethical quagmire here is evident. While AI offers a scalable solution for content moderation, its limitations can result in unjust censorship or the perpetuation of harmful content. The stakes are high, involving not just individual rights but the health of public discourse and, ultimately, the functioning of democracy itself.

Legal and Human Rights Landscape

The use of AI in shaping our online experiences raises not only ethical questions but also significant legal ones. International human rights law is clear on freedom of expression: it protects not only the freedom to impart information but also the right to receive it. When AI algorithms decide what content we see or don’t see, they directly affect this fundamental right.

The legal framework around freedom of expression requires any restriction to be prescribed by law, to pursue a legitimate aim, and to be necessary in a democratic society. When AI is used to personalize or moderate content, any resulting restrictions must meet these same conditions. This raises critical questions of legality, legitimacy, and proportionality. For instance, an AI system that removes or blocks content excessively could amount to an unlawful interference with freedom of expression.

Moreover, the lack of a clear legal framework for AI’s role in content moderation and personalization complicates matters. Social media platforms are often encouraged to remove illegal content voluntarily, without a clear legal basis. This lack of legal clarity can lead to arbitrary decisions that may not withstand judicial scrutiny, further muddying the waters of what is already a complex issue.

The Council of Europe’s Warning

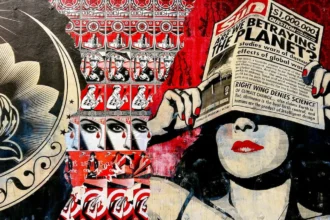

The Council of Europe has sounded the alarm about AI’s capacity to manipulate public opinion and individual behavior. This is not merely a theoretical concern; it is a pressing issue that affects how we interact with information online. The Council points out that AI systems can influence our emotions and thoughts at a subconscious level and can even undermine our ability to make independent decisions.

This warning carries added weight because many online platforms do not offer an opt-out from AI personalization. Users are therefore often subjected to algorithmic influence, sometimes unwittingly, without their explicit consent. This raises ethical and legal questions about user autonomy and the right to form opinions without undue interference.

The Council of Europe’s warning serves as a crucial reminder that while AI has the potential to enrich our online experiences, it also has the power to manipulate and constrain them. Therefore, it’s imperative that regulatory frameworks are put in place to safeguard individual autonomy and freedom of expression in the age of AI.

The Need for a Legal Framework

The lack of a comprehensive legal framework governing AI’s role in content moderation and personalization is a glaring gap that needs urgent attention. Currently, social media platforms are largely left to their own devices and encouraged to self-regulate their content. While this may seem practical, it creates significant problems of accountability and transparency.

This self-regulatory approach can have unintended consequences, such as excessive censorship or the removal of content that is crucial for public debate. Such actions not only infringe on individual freedoms but also undermine the pillars of democratic society.

Therefore, the need for a legal framework is not just a bureaucratic requirement but a fundamental necessity to ensure that AI serves the public interest without compromising individual rights and freedoms. Establishing such a framework would provide clear guidelines for platforms, setting the boundaries for what is permissible and what is not, while also offering avenues for redress for those who feel their rights have been violated.

Conclusion: Striking a Balance

Striking a balance between the benefits of AI and the preservation of human rights is a complex but essential task. AI’s impact on freedom of expression offers both opportunities and challenges. On one hand, it can significantly enhance our online experience by personalizing content and moderating harmful material. On the other, it poses ethical and legal dilemmas that can’t be overlooked.

The absence of a robust legal framework exacerbates these challenges and blurs the line between permissible and impermissible content. This lack of clarity can result in violations of fundamental rights, including freedom of expression.

Therefore, as we continue to integrate AI into our digital lives, the call for clear legal frameworks and ethical guidelines becomes increasingly urgent. These frameworks should aim to protect individual freedoms while allowing room for technological innovation. Only by achieving this delicate balance can we ensure that AI serves as a tool for empowerment rather than a mechanism for control.

Adapted from an academic study for a wider audience, under license CC BY 4.0