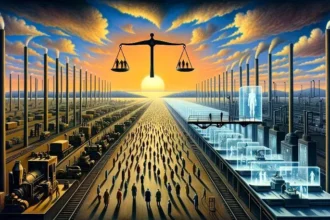

Democracy is built on the idea that law, not code, governs human life. Yet as artificial intelligence takes on increasingly normative functions—ranking speech, predicting behavior, filtering content, allocating resources—it begins to reshape what counts as legitimate, acceptable, or true.

These operations are political decisions in all but name. They are shaped by priorities that remain opaque and insulated from democratic oversight, and they are made by systems that are unelected and often unaccountable.

This article explores a critical shift: AI is no longer just a tool used within democratic societies; it is becoming a site of norm production that rivals democratic institutions themselves. As systems of governance migrate from parliaments to platforms, from constitutions to algorithms, the question arises: is a democratic intelligence even possible in a world governed by artificial ones?

The Silent Shift from Law to Code

In liberal democracies, norms are supposed to be enacted by elected representatives, made visible through deliberation, and constrained by constitutional frameworks.

But as Giovanni De Gregorio argues, AI systems are now producing de facto norms—shaping behaviors and expectations in ways that bypass traditional legal mechanisms.

When search engines rank results, or when content moderation systems decide what violates “community standards,” they operationalize values.

These values are embedded not in constitutions but in training data, market incentives, and technical constraints. Unlike law, algorithmic rules are not debated, publicized, or interpreted in courts. They are optimized.

As Elkin-Koren explains, these systems increasingly act as regulatory agents in the public sphere, filtering political discourse with minimal transparency.

In doing so, they gradually erode the distinction between governance and infrastructure, substituting code for deliberation.

AI as a Normative Agent Without a Public

The opacity of AI systems underscores their detachment from any form of democratic accountability. As Andreas Jungherr argues, AI technologies increasingly shape the structural preconditions of democratic life: they define how information circulates, how public preferences are formed, and how legitimacy is produced.

What makes this shift especially consequential is the way AI systems reorder the architecture of the public sphere. They influence not only the content of democratic discourse but also the conditions of its emergence.

This involves a redistribution of communicative power, where visibility and influence are no longer mediated by public institutions but by private infrastructures optimized for attention and efficiency.

In such a configuration, trust becomes a substitute for political accountability. Citizens relate to systems that present themselves as neutral or intelligent, not as representatives of collective will. The danger lies in replacing informed consent with silent acquiescence, and political legitimacy with procedural credibility.

This transformation reshapes the very structure of the demos. AI systems mediate who speaks, who is heard, and what is knowable. They shape the epistemic and affective environment in which political agency takes form.

Before a citizen can act, the terrain of possible action has already been algorithmically filtered. That is what makes the challenge so urgent: the democratic subject is being redefined from below, by infrastructures that were never designed to support self-government.

The Rise of the Rule of Tech

Traditional democratic theory is based on the idea that the rules can be contested, interpreted, and changed by those they govern. But machine learning models are not interpretable in this way. Their logic is probabilistic, their feedback loops obscure, and their optimization criteria invisible to most users.

This generates what De Gregorio calls a new form of normative power—not coercive, but architectural. It structures behavior without recourse to justification. The risk is not just authoritarianism, but a quiet reengineering of the public sphere through technical systems that claim neutrality while enforcing norms.

When this power intersects with existing social inequalities, the effects are amplified. As Spencer Overton documents in Overcoming Racial Harms to Democracy, algorithmic systems can reproduce and intensify racial biases, undermining the very promise of equal citizenship. And because these systems operate at scale, their impact is both widespread and difficult to contest.

Is Democratic AI Possible?

Some scholars argue for the development of democratic AI systems: transparent, accountable, participatory.

But even well-intentioned governance frameworks often lack enforceability.

Voluntary codes and ethical guidelines rarely confront the structural asymmetries between private tech power and public oversight.

The challenge is not just to make AI fairer, but to make its normativity contestable.

That means designing systems that do not just output decisions, but expose the values embedded within them. It means embedding rights not only in legal texts, but in technical architectures. It may also mean rethinking constitutionalism itself.

If AI is to be democratized, the political community must regain control over the infrastructures that shape public life. Otherwise, democratic values risk being simulated, not enacted.

Conclusion: The Struggle for Normative Sovereignty

AI systems do not simply shape our tools of governance—they reshape the expectations of governance itself.

As their influence grows, they redefine what counts as legitimate participation, what is considered rational debate, and which forms of dissent are visible or silenced.

This redefinition occurs outside the realm of public contestation, without constitutional framing or democratic deliberation.

In this way, AI’s rise introduces a new kind of sovereignty—one that is technical, infrastructural, and insulated from the very principles of collective self-rule.

The resilience of democracy in this context hinges on our capacity to recognize the political nature of AI’s normative influence and to establish institutional mechanisms that can challenge, constrain, and redirect that influence.

At stake is the authority to determine how AI systems should operate—and under whose normative frameworks.